Key Takeaways

- GPT-5 needs a larger context window to be competitive

- Video input capability is crucial for GPT-5 success

- GPT-5 must improve response speed and logical reasoning for a major upgrade

GPT-5 has been a hot topic for quite a while now, and OpenAI CEO Sam Altman recently commented on the future of the GPT model on Lex Fridman's podcast. On the podcast, he said that GPT-4 "kind of sucks" now and that he's looking forward to what comes next. He declined to call it "GPT-5", but a recent Business Insider report did name it as such, citing people familiar with the LLM who described it as "materially better" than GPT-4.

Larger context window

Part of what makes Gemini so powerful

A context window is essentially how much an LLM can "see" at any given time, and part of what makes Gemini so powerful is the sheer size of its context window: Gemini 1.5 Pro supports up to 1 million tokens, and Google says it has tested up to 10 million tokens in research. While the amount of memory required for that is absurd, a larger context window would still be amazing. GPT-4 has a context window of up to 32K tokens, and GPT-4 Turbo bumps this up to 128K. That's a significant jump, but Google still beats it comfortably with Gemini 1.5.

As already mentioned, there are memory limitations here that OpenAI would need to solve on the server side, but advancements in this area could make a much larger context window feasible for end users.

Video input

True multi-modality

GPT-4 with Vision already exists, and it can interpret visual data and use it in decision-making. The problem is that it's too slow to interpret multiple images in quick succession, which means video input is currently out of the question. It would be great if OpenAI made headway in this area, allowing GPT-5 to truly take video inputs into account. I'm hopeful, as the company has been making decent strides in video AI in general, most notably with the reveal of Sora. Google is also working on video input with Gemini 1.5, and it's looking promising.

Faster responses

GPT-4 is much slower than the competition

As time has gone on, GPT-4 has simply become too slow when it comes to generating responses. While part of that is almost certainly down to the barrage of traffic that OpenAI gets on a daily basis, competitors from the likes of Google and Anthropic manage to respond much faster. OpenAI needs to improve response generation times, and hopefully, GPT-5 can be a more efficient model that can do that.

Personally, this is my biggest gripe with GPT-4 at the moment. Google's Gemini Advanced in particular is so much faster than what OpenAI can offer that when I ask both services for a response I know will be long, Gemini finishes a whole minute sooner.

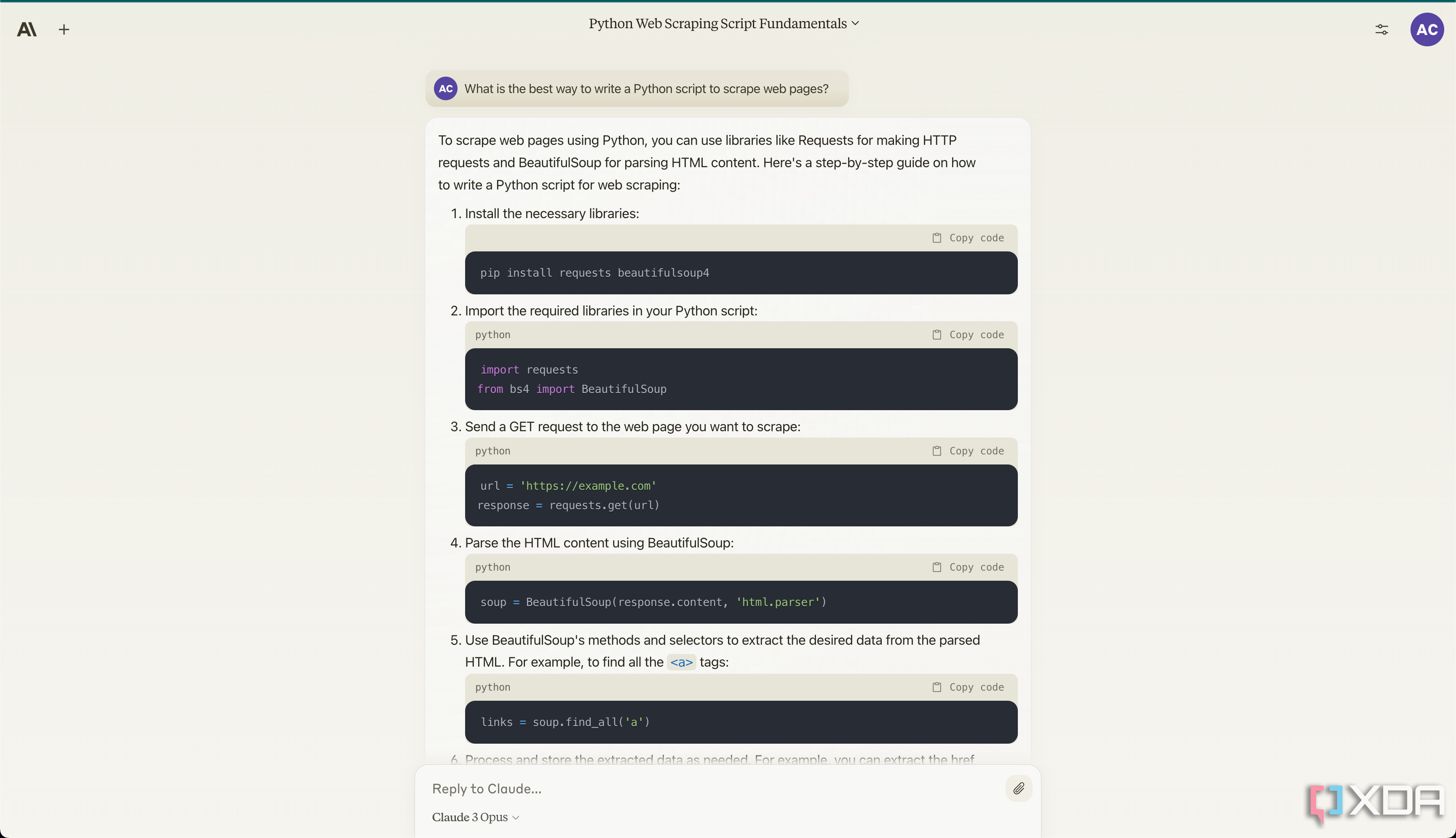

Improved logical reasoning

GPT-4 is starting to fall behind

Logical reasoning is difficult for any LLM, particularly as LLMs are essentially glorified pattern-matching algorithms. They can build responses based on things they've seen before, but anything beyond that is a guess. Mathematics is a good example: if a question isn't in a model's training set, it simply guesses what the answer should be in order to fill in the gaps.

Logical reasoning needs to improve massively for OpenAI to gain another major advantage, as Google's Gemini Advanced and Anthropic's Claude 3 Opus have both improved by leaps and bounds in this area.

Microsoft and Google have 365 and Workspace, but what does OpenAI have?

If you're using Microsoft Copilot Pro or Google Gemini Advanced, you're probably aware of the tool integrations that come with each service. Copilot Pro has full Microsoft 365 integration, and Gemini Advanced has full Google Workspace integration. Those are big advantages over competitors, to the point that Copilot Pro is simply a better purchase than ChatGPT Plus for most people.

With GPT-5, it'd be nice to see that change with more integrations for other services. Given that plugin support seems to be waning in favor of custom GPTs, I suspect OpenAI's list of advantages is beginning to dwindle, especially since Copilot has custom GPTs, too. I'd love to see OpenAI partner with other companies to introduce exclusive features.

GPT-5 will hopefully be big

These are some of the things I'm most hopeful for when it comes to the next iteration of GPT, but to be honest, the company could take it in any direction. With Altman's comments suggesting that GPT-5 will be a major upgrade, we're certainly hopeful, but there's no guarantee that the company will manage to claw back the massive lead it had over the rest of the industry when ChatGPT first launched.