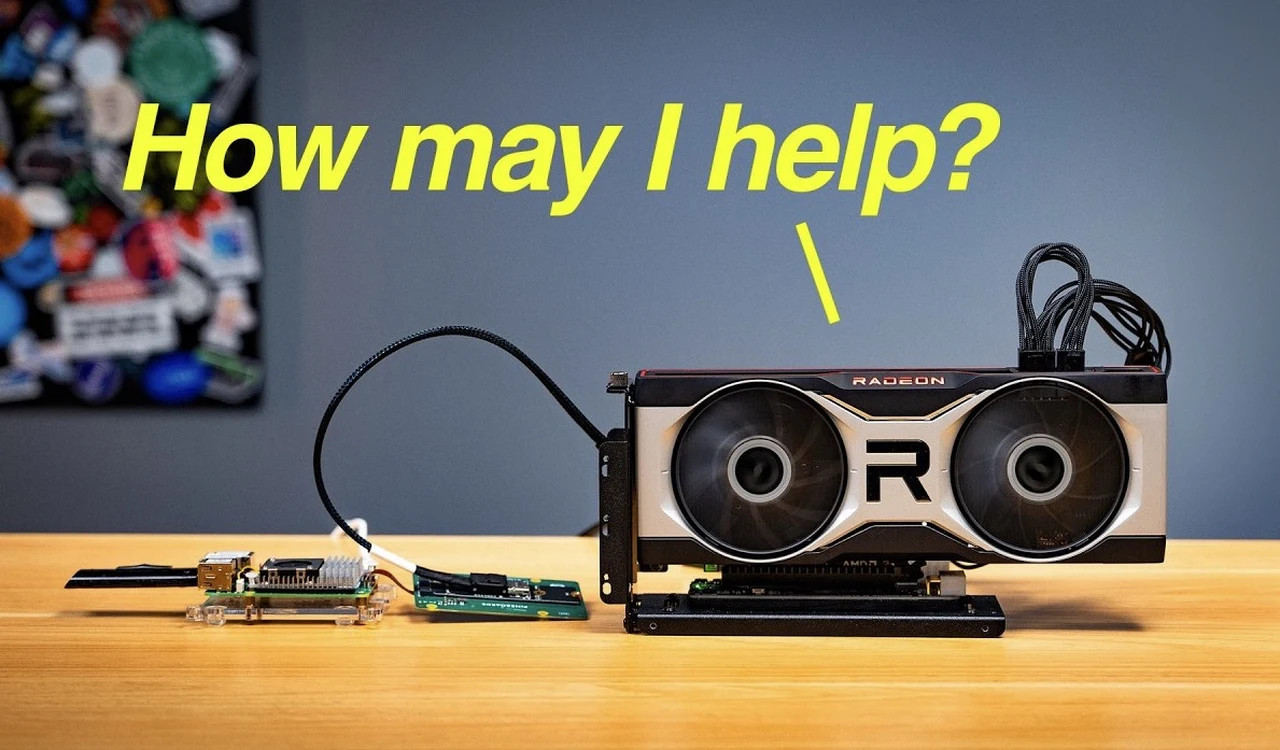

If you would like to run large language models (LLMs) locally, perhaps on a single-board computer such as the Raspberry Pi 5, you should definitely check out the latest tutorial by Jeff Geerling, who walks through setting up LLMs on a Raspberry Pi 5.

Imagine having the power of advanced AI at your fingertips, not just in a massive data center but right in your own home. For many tech enthusiasts, the idea of running LLMs on something as compact and affordable as a Raspberry Pi might sound like a dream. Yet, with the integration of AMD graphics cards for hardware acceleration, this dream is becoming a reality. Whether you’re a seasoned developer or a curious hobbyist, the potential to harness AI locally, with improved efficiency and cost-effectiveness, is within reach.

Running AI on a Pi 5

By using AMD graphics cards, traditionally reserved for larger desktop systems, you can reach generation speeds of up to 40 tokens per second. This setup promises faster processing and can also be a more energy-efficient alternative to CPU-only setups.

TL;DR Key Takeaways:

- Running large language models on a Raspberry Pi 5 with AMD graphics cards enhances performance and offers a cost-effective solution for local AI applications.

- Integrating AMD graphics cards requires a PCI Express connection, a reliable power supply, and a GPU riser for seamless connectivity.

- GPU acceleration significantly boosts processing speeds and efficiency, achieving up to 40 tokens per second while reducing energy costs.

- Challenges include hardware constraints of consumer graphics cards and the need for custom Linux kernels for driver support on Raspberry Pi.

- This setup enables local AI applications, enhancing privacy and reducing cloud reliance, while encouraging community experimentation and innovation.

Setting Up Your Hardware

To harness the power of LLMs on a Raspberry Pi 5, the integration of an AMD graphics card is crucial. This setup requires careful consideration of several key components: a PCI Express connection, a robust power supply, a GPU riser for connectivity, and Pineboards hardware for efficient power distribution.

The PCI Express connection serves as the vital link between the Raspberry Pi and the AMD graphics card, allowing high-speed data transfer. A GPU riser is essential for physically connecting the graphics card to the Raspberry Pi and ensuring proper PCI Express connectivity.

Power management is critical in this setup. A reliable power supply must be capable of handling the combined energy demands of the Raspberry Pi and the graphics card. Using Pineboards hardware can simplify power distribution and connectivity management, creating a more stable and efficient system overall.
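As a rough illustration of sizing the power supply for the combined load, the sketch below adds up peak draws and applies a safety margin. The wattages and the 20% headroom are placeholder assumptions, not measurements from the tutorial; substitute the figures from your own graphics card's specification.

```python
def required_psu_watts(component_watts, headroom=0.2):
    """Return a suggested supply rating: summed peak draw plus a safety margin."""
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    total = sum(component_watts.values())
    return total * (1 + headroom)


# Hypothetical peak draws in watts (illustrative only).
budget = {
    "raspberry_pi_5": 12.0,
    "graphics_card": 150.0,
    "riser_and_fans": 5.0,
}

needed = required_psu_watts(budget)
print(f"Suggested supply rating: at least {needed:.0f} W")
```

The margin is there because graphics cards can briefly draw more than their rated power, so a supply sized exactly to the sum may be unstable.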

This configuration uses the processing capabilities of AMD graphics cards, typically found in more powerful systems, and adapts them for use with the compact Raspberry Pi platform. The result is a unique blend of affordability and performance that opens up new possibilities for AI experimentation and development.

Boosting Performance and Efficiency

The integration of GPU acceleration brings substantial performance improvements to LLM processing on the Raspberry Pi. Users can expect processing speeds of up to 40 tokens per second, a significant leap forward compared to CPU-only setups. This enhancement is particularly notable when dealing with the complex computational demands of large language models.
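To check a figure like 40 tokens per second on your own hardware, you can time a generation call and divide the number of tokens produced by the elapsed time. The sketch below assumes a hypothetical `generate` callable that returns a list of tokens; it stands in for whatever inference tool you actually use, and `fake_generate` exists only so the example runs on its own.

```python
import time


def tokens_per_second(token_count, elapsed_seconds):
    """Throughput is simply tokens produced divided by wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return token_count / elapsed_seconds


def time_generation(generate, prompt):
    """Time one call to `generate` (a hypothetical callable returning tokens)."""
    start = time.perf_counter()
    tokens = generate(prompt)
    elapsed = time.perf_counter() - start
    return tokens_per_second(len(tokens), elapsed)


def fake_generate(prompt):
    """Stand-in for a real model: sleeps briefly and echoes the prompt's words."""
    time.sleep(0.01)
    return prompt.split()


rate = time_generation(fake_generate, "tell me about the Raspberry Pi 5")
print(f"{rate:.1f} tokens/s (from the stand-in generator, not a real model)")
```

For a real measurement, average over several runs and keep the prompt and model fixed, since throughput varies with both.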

Beyond raw performance, GPU-accelerated systems often demonstrate improved energy efficiency. The specialized architecture of graphics cards allows for more efficient processing of AI workloads, potentially reducing overall power consumption. This efficiency not only contributes to lower energy costs but also aligns with growing concerns about the environmental impact of AI technologies.
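One way to make the efficiency comparison concrete is energy per token: average power draw divided by throughput. The sketch below uses made-up numbers for a CPU-only and a GPU-accelerated setup purely to show the arithmetic; they are not results from the tutorial, and real values should come from a power meter at the wall.

```python
def joules_per_token(average_watts, tokens_per_second):
    """Energy spent per generated token (watts are joules per second)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return average_watts / tokens_per_second


# Placeholder figures for illustration only, not measurements.
cpu_only = joules_per_token(average_watts=12.0, tokens_per_second=2.0)
gpu_accelerated = joules_per_token(average_watts=100.0, tokens_per_second=40.0)

print(f"CPU-only:        {cpu_only:.2f} J/token")
print(f"GPU-accelerated: {gpu_accelerated:.2f} J/token")
```

A setup that draws more power can still use less energy per token if its throughput rises faster than its power draw.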

The affordability of consumer graphics cards further enhances the appeal of this approach. By using readily available hardware, developers and enthusiasts can explore advanced AI technologies without the need for expensive, specialized equipment. This broader access to AI resources has the potential to spark innovation and widen participation in the field.

Overcoming Challenges

While the benefits of GPU acceleration on a Raspberry Pi are clear, several challenges must be addressed:

- Hardware limitations of consumer graphics cards

- Driver compatibility issues

- Custom Linux kernel requirements

Consumer graphics cards, while powerful, may face constraints when running particularly large or complex models. It’s essential to carefully consider the specific requirements of your intended applications and choose a graphics card that balances performance with compatibility.
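A quick way to judge whether a model is likely to fit on a given card is to estimate its memory footprint from the parameter count and the number of bits per weight. The sketch below is a back-of-the-envelope estimate: the 20% overhead for context and runtime buffers is an assumption, and actual usage depends on the inference software and context length.

```python
def model_memory_gib(params_billions, bits_per_weight, overhead_fraction=0.2):
    """Rough memory estimate in GiB: weight storage plus an assumed overhead."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead_fraction) / 2**30


def fits_in_vram(params_billions, bits_per_weight, vram_gib, overhead_fraction=0.2):
    """True if the estimated footprint is within the card's video memory."""
    estimate = model_memory_gib(params_billions, bits_per_weight, overhead_fraction)
    return estimate <= vram_gib


# Example: models quantized to 4 bits per weight on a card with 8 GiB of VRAM.
for size in (8, 70):
    estimate = model_memory_gib(size, 4)
    verdict = "fits" if fits_in_vram(size, 4, 8) else "does not fit"
    print(f"{size}B parameters: about {estimate:.1f} GiB, {verdict} in 8 GiB")
```

Treat the result as a first filter when choosing a card, then confirm with the actual model file size.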

Driver support for AMD graphics cards on the Raspberry Pi platform can be challenging. Often, a custom Linux kernel is required to ensure proper compatibility and functionality. This process demands a certain level of technical expertise and can be time-consuming, particularly for those new to Linux system administration.
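After booting a custom kernel, a simple sanity check is whether the `amdgpu` driver module is actually loaded. On Linux, `/proc/modules` lists loaded modules one per line with the module name first. The sketch below parses that format; note that a driver compiled directly into the kernel rather than built as a module will not appear there, so a negative result is not conclusive.

```python
from pathlib import Path


def loaded_modules(modules_text):
    """Return the set of module names from /proc/modules-style text."""
    return {line.split()[0] for line in modules_text.splitlines() if line.strip()}


def is_module_loaded(name, modules_text):
    """True if `name` appears as a loaded module in the given text."""
    return name in loaded_modules(modules_text)


# Only attempt the live check on systems that expose /proc/modules.
proc_modules = Path("/proc/modules")
if proc_modules.exists():
    loaded = is_module_loaded("amdgpu", proc_modules.read_text())
    print("amdgpu module loaded:", loaded)
else:
    print("/proc/modules not available on this system")
```

If the module is missing, the kernel log is usually the next place to look for driver errors.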

To mitigate these challenges, it’s advisable to thoroughly research various AMD graphics card options. Consider factors such as power consumption, physical size, and known compatibility issues with the Raspberry Pi platform. Online communities and forums can be valuable resources for identifying the most suitable hardware configurations and troubleshooting common issues.

Exploring Applications and Future Opportunities

The ability to run LLMs on a Raspberry Pi with GPU acceleration opens up a world of possibilities for local AI applications. Some potential use cases include:

- Privacy-focused home assistants

- Offline language translation tools

- Local content generation and analysis

By processing AI workloads locally, these applications can operate independently of cloud services, enhancing privacy and reducing reliance on external servers. This autonomy is particularly valuable in scenarios where data sensitivity or internet connectivity is a concern.

The broader implications for AI resource usage are significant. By demonstrating that powerful AI models can run on compact, energy-efficient hardware, this approach promotes more sustainable and accessible technology use. It challenges the notion that advanced AI capabilities are exclusively the domain of large-scale data centers or high-end workstations.

Community experimentation with similar setups is strongly encouraged. The collective efforts of developers, hobbyists, and researchers can drive further innovations in AI processing on small-scale devices. By sharing experiences, optimizations, and novel applications, the community can collectively push the boundaries of what’s possible with compact AI systems.

As you explore this technology, consider how it might be applied to solve real-world problems or enhance existing processes. The integration of AI capabilities into everyday devices has the potential to transform various aspects of our lives, from smart home systems to personalized education tools.

By engaging with this technology, you’re not just experimenting with hardware configurations; you’re contributing to the evolving landscape of AI and its integration into everyday life. The insights gained from these small-scale implementations could inform future developments in AI hardware and software, potentially leading to more efficient and accessible AI solutions for a wide range of applications.

Media Credit: Jeff Geerling
