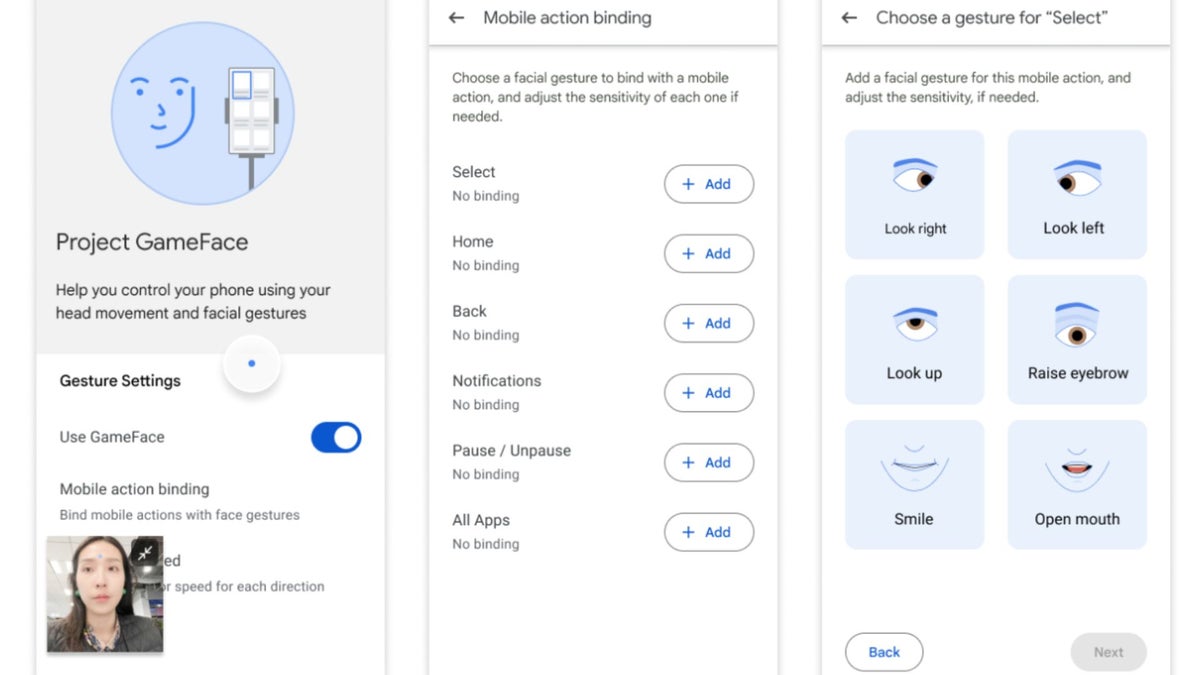

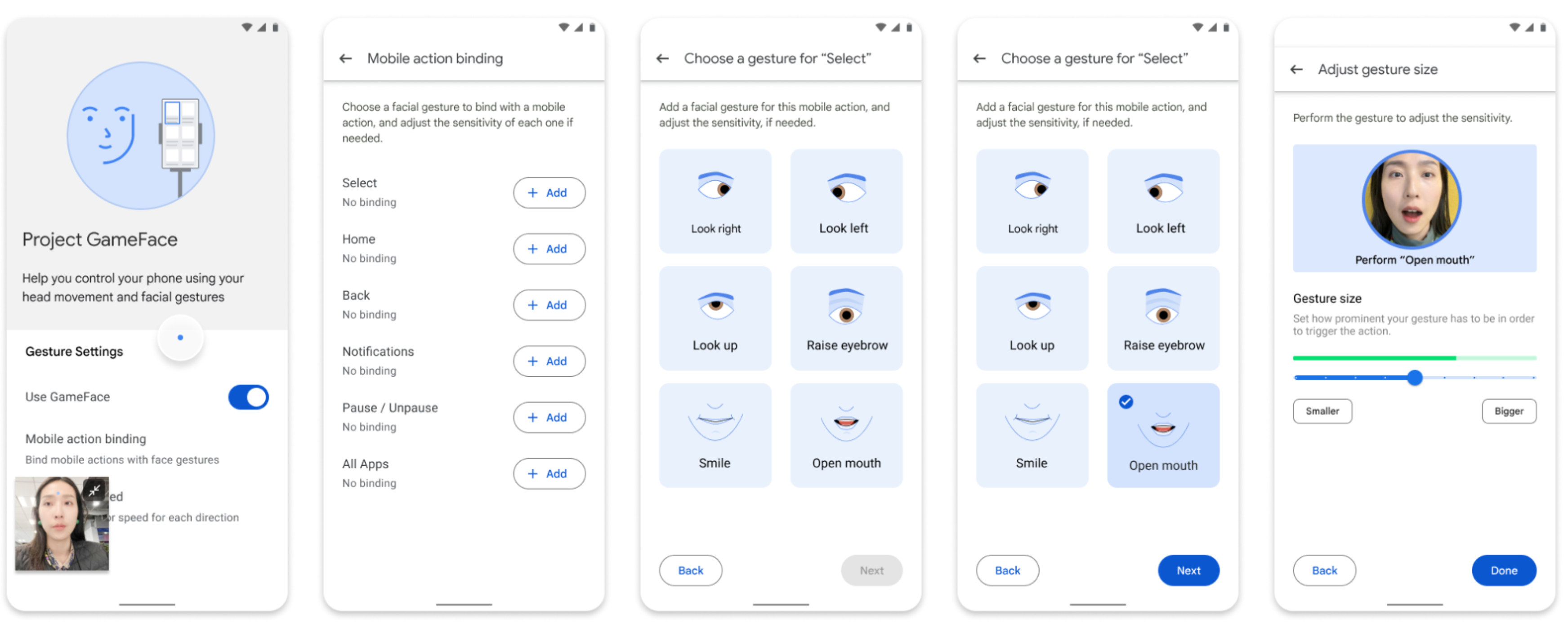

Powered by Android’s accessibility service, Google’s MediaPipe framework, and the device’s selfie camera, this feature lets users perform actions using only their facial expressions and head movements. For example, users can move the cursor by opening their mouths or raising their eyebrows. In essence, your face becomes the remote control: each expression or head movement is translated into a command for the “cursor,” or its Android touchscreen equivalent. Google also offers gesture customization, supporting up to 52 gestures and letting users adjust the gesture size, that is, how pronounced an expression must be before it triggers an action.

Image credit: Google
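To make the idea of an adjustable gesture size concrete, here is a minimal sketch of how per-gesture thresholds might map expressions to actions. It is not Google's implementation. It assumes the face tracker reports a score between 0 and 1 for each expression on every camera frame; the `GestureBinding` class, the `triggered_actions` function, the action labels, and the expression names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class GestureBinding:
    """Hypothetical link between one facial expression and one action."""
    expression: str   # illustrative expression name, e.g. "jawOpen"
    action: str       # illustrative action label, e.g. "tap"
    threshold: float  # "gesture size": minimum score (0..1) needed to trigger


def triggered_actions(scores: Dict[str, float],
                      bindings: List[GestureBinding]) -> List[str]:
    """Return the actions whose expression score meets its threshold."""
    return [
        b.action
        for b in bindings
        # An expression missing from this frame counts as a score of 0.
        if scores.get(b.expression, 0.0) >= b.threshold
    ]


# Assumed user configuration: a wide-open mouth taps, raised brows go home.
BINDINGS = [
    GestureBinding("jawOpen", "tap", threshold=0.6),
    GestureBinding("browInnerUp", "go_home", threshold=0.4),
]

# Assumed scores for a single camera frame.
frame_scores = {"jawOpen": 0.72, "browInnerUp": 0.15}
print(triggered_actions(frame_scores, BINDINGS))  # prints ['tap']
```

Raising a binding's threshold means the user has to make a more pronounced expression before the action fires, while lowering it makes the gesture easier to trigger but more prone to accidental activation.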

Google says that its approach to creating Project Gameface for Android revolved around three fundamental principles:

- Empower individuals with disabilities by providing an alternative method to interact with Android phones.

- Develop a cost-effective solution that’s accessible to a wide audience, promoting widespread adoption.

- Draw from insights and guiding principles gained from the initial Gameface launch to ensure the product is user-friendly and customizable.

Right now, Project Gameface is available only to developers, and it is entirely up to them whether to integrate it into their mobile games on Android. Let’s hope it is widely adopted, as it looks like a smart way to improve accessibility across the Android platform.