Against all odds, Intel Arc A770 runs Indiana Jones and the Great Circle at maximum settings better than Nvidia GeForce RTX 3080. That’s a $349 graphics card beating what was the best ray tracing pixel pusher money could buy for $699 back in 2020. While the latter boasts a GPU that wipes the floor with the former, its rendering prowess can only do so much with its relatively piddly 10GB pool of VRAM. This isn’t the first game to highlight this problem, nor will it be the last, and it’s high time manufacturers (Nvidia especially) acknowledged it with real change.

Before we dive into the particulars of Indiana Jones, some context. Nvidia’s GeForce RTX 4060 Ti and RTX 4060, and AMD’s Radeon RX 7600, have all rightly received criticism for arriving with 8GB of VRAM. The problem is that 8GB simply isn’t enough to keep up with the growing prevalence of ray tracing and the increasing size of texture pools. The result is graphics cards whose GPUs have more performance to give but are held back by their memory capacity.

Case in point: GeForce RTX 4060 Ti 16GB and Radeon RX 7600 XT. These cards are practically identical to their 8GB counterparts in all but VRAM. Put them all in a drag race and the 16GB graphics cards will fly ahead at higher resolutions, as well as in games with swathes of ray tracing and large textures like A Plague Tale: Requiem. It’s for the same reason that many continue to buy GeForce RTX 3060, and that Radeon RX 7700 XT remains the most affordable model I can recommend wholeheartedly: both come with 12GB of VRAM.

Indiana Jones and the Great Circle benchmarks by ComputerBase lend some vindication to my viewpoint. The outlet remarks that “graphics cards with 8GB and 10GB do not achieve playable results in WQHD,” but I’d argue this extends to 1080p as well. At FHD, GeForce RTX 3080 averages 36fps, but its 1% lows of 24fps indicate a less-than-ideal experience. Meanwhile, Arc A770 keeps a steadier performance profile, with an average of 41fps and 1% lows of 32fps.

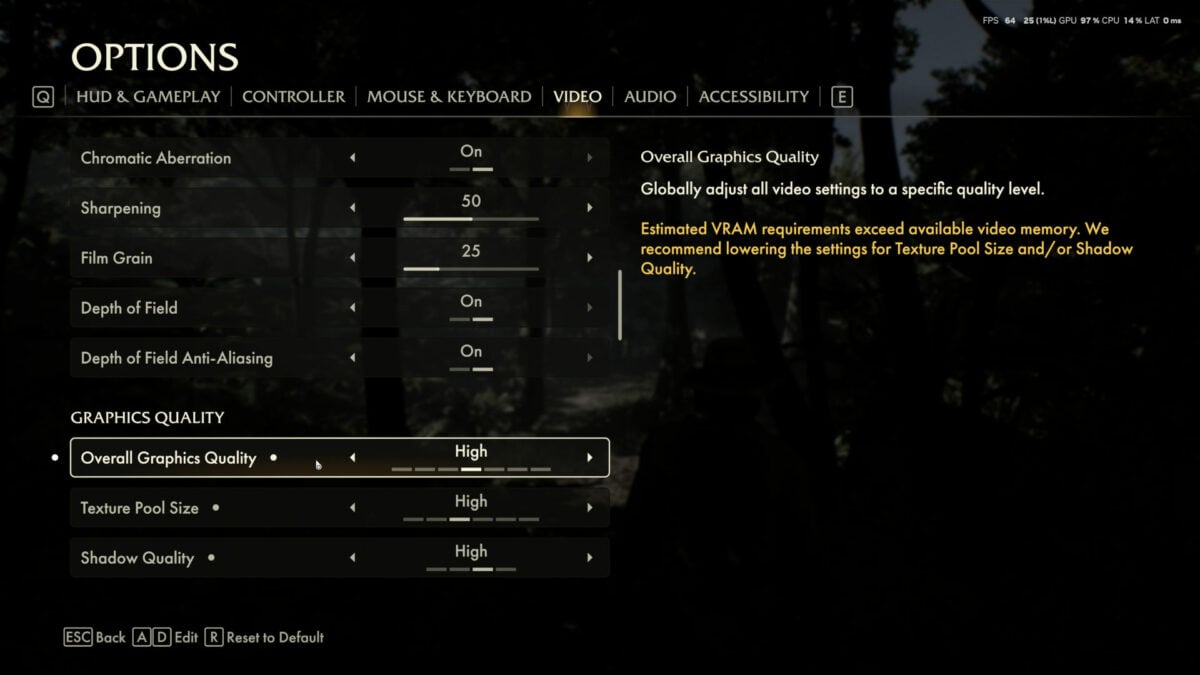

While my GeForce RTX 3080 Ti naturally fares better thanks to its 12GB of VRAM, the game still prompts me to dial back ‘Texture Pool Size and/or Shadow Quality’ as early as the ‘High’ preset. This is not the kind of value I expect from a three-year-old former $1,199 sub-flagship graphics card. I can only hope that GeForce RTX 4080 Super and RTX 4070 Ti Super are signs of Nvidia attempting to combat such issues.

Further cementing the need for larger pools of video memory, Digital Foundry finds that VRAM is the single most crucial specification for strong performance in Indiana Jones and the Great Circle. With tweaks to relieve pressure on the memory buffer, the game is otherwise surprisingly lightweight, even on budget graphics cards. Nvidia DLSS and Frame Generation can also play their part in lightening the load on the GeForce RTX 4060 series’ 8GB of VRAM, but Radeon and Arc users don’t enjoy such luxuries given Indy’s lack of support for FSR and XeSS.

I cannot stress enough that examples like this will only continue to grow in number with the passage of time. Ray tracing (and now path tracing) isn’t going away, nor is the march towards higher resolutions. Should GPU manufacturers wish to provide value to their customers across the price spectrum, we must see VRAM capacities rise without incurring a substantial price uplift. If we are to embrace a future where upscaling and frame generation are more the rule than the exception, then it’s only right that these features have room to shine their brightest.

There’s still no official word from AMD or Nvidia on how much VRAM the most affordable Radeon RX 8000 or GeForce RTX 50 Series graphics cards will boast. However, I hope that the muted reception towards their current budget offerings and examples like Indiana Jones and the Great Circle give both companies reason to see sense. Failing that, Intel Arc B580 could well and truly become a budget champion with 12GB of VRAM, provided its performance holds up.