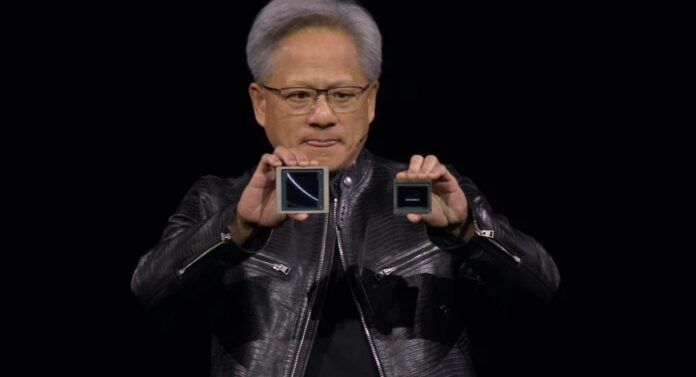

Nvidia’s next-generation Blackwell GPU for artificial intelligence has been revealed by CEO Jensen Huang. The new chip will cost about the same as its predecessor while offering much higher performance and efficiency.

On CNBC’s business show Squawk on the Street, Nvidia boss Jensen Huang told host Jim Cramer that the brand’s upcoming Blackwell (B200) AI GPU will cost between $30,000 and $40,000 per unit. This is close to the price of its predecessor, the Hopper H100 GPU. That said, the figure is only an approximation, as Nvidia prefers to sell complete systems rather than individual B200 GPUs. Accordingly, Nvidia only lists DGX B200 and DGX B200 SuperPOD systems on its website. In other words, the final cost of owning an operational unit may be much higher.

According to Raymond James analysts, each B200 GPU costs Nvidia $6,000 to make, against $3,320 for the H100. The analysts add that Nvidia will price its new chip at a 50% to 60% premium over its predecessor. Separately, the brand’s CEO estimated that the chip cost about $10 billion to research and develop, saying that some new technologies had to be invented to make it possible.

Even though pricing may be higher, the B200’s performance will undoubtedly spark a lot of interest. According to Nvidia, the B200 brings 2x to 5x gains across all parameters compared to Hopper counterparts. This is possible thanks to its massive 208 billion transistors (104 billion on each compute die) and 192GB of HBM3E memory. To achieve this transistor count, Nvidia links two compute dies, each built on TSMC’s 4NP process node.

Nvidia’s new chip is expected to be in very high demand as the artificial intelligence boom keeps growing. Regardless of pricing, one suspects the Green Team will find entities with deep enough pockets. Hopefully, these efficiency and performance advancements will trickle down to next-generation gaming GPUs.