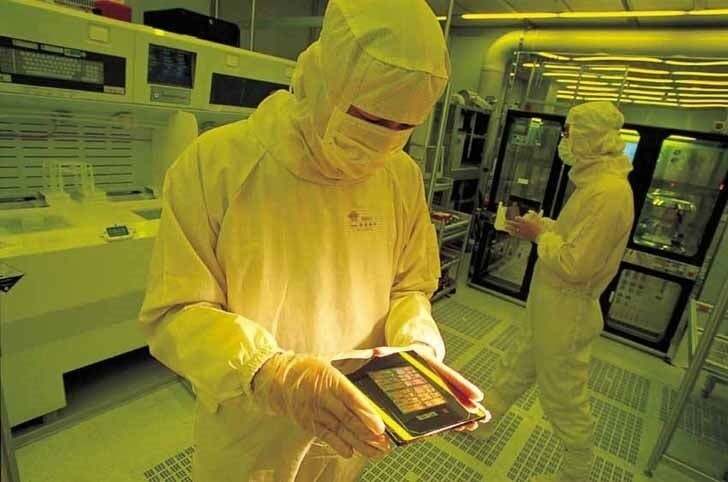

TSMC will reportedly build OpenAI’s in-house chip using its A16 Angstrom node. | Image credit: TSMC

So how much better will TSMC’s A16 Angstrom node be than the 2nm node that precedes it? At the same operating voltage, A16 could be 8-10% faster; alternatively, at the same speed, it could consume up to 20% less power. According to 2023 estimates from Bernstein analyst Stacy Rasgon, each query submitted to ChatGPT costs OpenAI about 4 cents. If ChatGPT usage keeps growing until it reaches one-tenth the scale of Google Search, OpenAI would need $16 billion worth of chips every year, which could make this a very profitable venture for TSMC.

Earlier this year, a rumor circulated that TikTok parent ByteDance was developing an AI chip with its partner Broadcom. The chip would be produced by TSMC on its 5nm process node. You might wonder how China-based ByteDance could get around U.S. sanctions to team up with Broadcom. Apparently, a loophole allows customized application-specific integrated circuits (ASICs) to be produced and exported to China.

ByteDance plans to use the in-house chip to run powerful new AI algorithms for TikTok and for Douyin, its app for the Chinese market. The company also runs a chatbot in China called Doubao. While it waits for TSMC to begin production of its in-house chip, ByteDance still has an inventory of NVIDIA chips that it purchased before U.S. sanctions took effect.