TL;DR Key Takeaways:

- ReasonAgain is a new methodology developed to enhance AI reasoning, particularly in large language models, by using symbolic programs to test understanding beyond memorization.

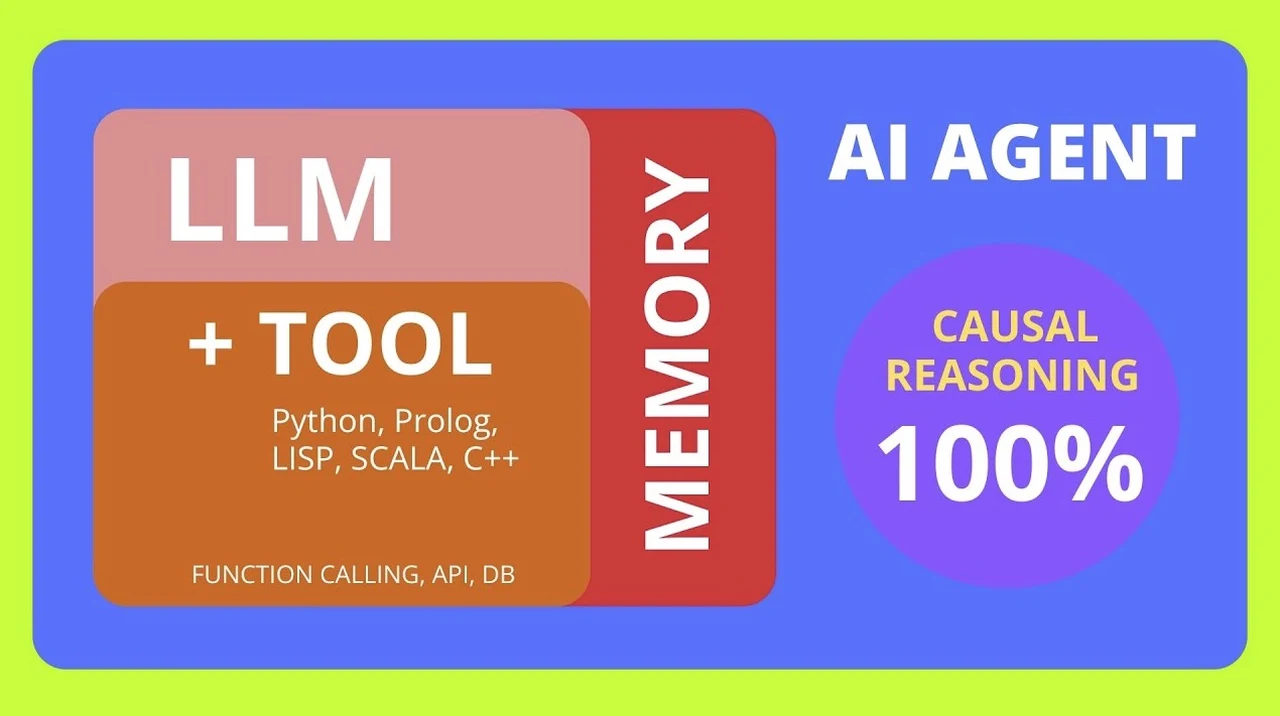

- The approach focuses on improving causal reasoning, enabling AI systems to understand cause-and-effect relationships and make informed decisions.

- Symbolic code, especially Python, is central to ReasonAgain, transforming human questions into reusable functions to systematically challenge AI models.

- Dynamic evaluation techniques are employed to identify inconsistencies in AI reasoning, ensuring models can handle diverse tasks and improve reliability.

- Despite challenges in representing complex reasoning tasks, ReasonAgain’s advancements highlight the potential for AI systems to perform intricate problem-solving with high accuracy.

ReasonAgain represents a paradigm shift in how we assess and enhance AI reasoning capabilities. By integrating symbolic techniques with traditional machine learning approaches, this methodology offers a more comprehensive and nuanced evaluation of AI systems’ cognitive abilities. The implications of this research extend far beyond academic circles, potentially reshaping how AI is developed and applied across various industries.

Understanding Causal Reasoning in AI

Causal reasoning is essential for intelligent behavior, allowing AI systems to grasp cause-and-effect relationships. ReasonAgain uses symbolic programs to check whether a model's answers follow from underlying principles rather than from memorized examples, with the stated aim of reaching perfect performance on causal reasoning tasks. Such an approach is vital for developing AI systems capable of making informed decisions based on causal relationships.

The ability to reason causally is a cornerstone of human intelligence, and replicating this in AI systems has been a long-standing goal. ReasonAgain’s approach to causal reasoning involves:

- Developing symbolic representations of causal relationships

- Creating test scenarios that challenge AI models to infer cause and effect

- Evaluating the consistency and accuracy of AI responses across various contexts

By focusing on causal reasoning, ReasonAgain addresses a critical gap in current AI capabilities, potentially leading to more robust and reliable AI systems in complex decision-making scenarios.
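The three steps above can be illustrated with a deliberately small toy. The sketch below is not taken from the ReasonAgain paper: the sprinkler scenario, the `ground_state` rule, and the `build_scenarios` helper are all hypothetical names chosen for illustration. It encodes one cause-and-effect rule as a Python function and then enumerates every combination of causes to produce question and expected-answer pairs that a model could be tested against.

```python
from itertools import product


def ground_state(rain: bool, sprinkler: bool) -> dict:
    """Toy causal rule (an assumption for illustration): the ground is wet
    if it rains or the sprinkler runs, and wet ground is slippery."""
    wet = rain or sprinkler
    return {"wet": wet, "slippery": wet}


def build_scenarios() -> list:
    """Enumerate every combination of causes and pair each generated
    question with the effect the symbolic rule predicts."""
    scenarios = []
    for rain, sprinkler in product([False, True], repeat=2):
        question = (
            f"It {'is' if rain else 'is not'} raining and the sprinkler is "
            f"{'on' if sprinkler else 'off'}. Is the ground slippery?"
        )
        expected = ground_state(rain, sprinkler)["slippery"]
        scenarios.append((question, expected))
    return scenarios


scenarios = build_scenarios()
for question, expected in scenarios:
    print(question, "->", expected)
```

Because the expected answers come from the rule rather than from a fixed answer key, a model's responses can be compared against them across every context the rule covers.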

The Role of Symbolic Code

At the heart of ReasonAgain is the use of symbolic code, particularly Python, to transform human questions into reusable functions. This symbolic approach lets researchers systematically challenge AI models, revealing their reasoning capabilities. By introducing symbolic changes, such as altering the values a function takes, the methodology tests whether AI systems can adapt and maintain consistent reasoning, a key factor in overcoming memorization limitations.

The integration of symbolic code offers several advantages:

- Increased precision in defining reasoning tasks

- Greater flexibility in modifying and scaling test scenarios

- Enhanced ability to trace and analyze AI decision-making processes

This approach bridges the gap between traditional rule-based AI systems and modern machine learning models, combining the strengths of both paradigms to create more versatile and powerful AI reasoning systems.
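To make the idea of turning a question into a reusable function concrete, here is a minimal sketch. The apple word problem, the `AppleProblem` class, and its field names are assumptions invented for this example and do not come from the ReasonAgain paper. The point is only that once the numbers in a question become parameters, the same code can regenerate both the question text and its correct answer for any new values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppleProblem:
    """Hypothetical word problem with its quantities exposed as parameters."""
    start: int
    given_away: int
    bought: int

    def question(self) -> str:
        # Render the natural-language question from the current parameters.
        return (
            f"Sam has {self.start} apples, gives away {self.given_away}, "
            f"then buys {self.bought} more. How many apples does Sam have?"
        )

    def answer(self) -> int:
        # The symbolic program: the reasoning steps written as arithmetic.
        return self.start - self.given_away + self.bought


original = AppleProblem(start=5, given_away=2, bought=4)
variant = AppleProblem(start=12, given_away=7, bought=3)

print(original.question(), "->", original.answer())
print(variant.question(), "->", variant.answer())
```

A model that has only memorized the original question and its answer has no shortcut for the variant, while a model that follows the same reasoning steps should handle both.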

Dynamic Evaluation Techniques

ReasonAgain employs a dynamic and robust evaluation methodology, using symbolic changes to uncover inconsistencies in AI reasoning. This flexible framework is crucial for testing AI models under various conditions, making sure they can handle diverse reasoning tasks. By identifying and addressing these inconsistencies, researchers can boost the reliability and accuracy of AI reasoning.

The dynamic evaluation process involves:

- Generating a wide range of test cases through symbolic manipulation

- Assessing AI performance across different problem domains and complexity levels

- Iteratively refining AI models based on identified weaknesses and inconsistencies

This approach allows for a more comprehensive assessment of AI reasoning capabilities, going beyond traditional benchmarks to probe the limits of AI understanding and adaptability.
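The evaluation loop described above might look roughly like the following sketch. Everything here is illustrative: the word problem, the `generate_variants` and `consistency` helpers, and the two stand-in "models" are hypothetical and are not the paper's implementation. A real evaluation would replace the stand-ins with calls to an actual language model.

```python
import random
import re
from typing import Callable, List, Tuple

Params = Tuple[int, int, int]


def make_question(start: int, given_away: int, bought: int) -> str:
    return (
        f"Sam has {start} apples, gives away {given_away}, "
        f"then buys {bought} more. How many apples does Sam have?"
    )


def solve(start: int, given_away: int, bought: int) -> int:
    # Ground-truth symbolic program for the question template.
    return start - given_away + bought


def generate_variants(n: int, seed: int = 0) -> List[Params]:
    """Produce n parameter sets by perturbing the inputs of the program."""
    rng = random.Random(seed)
    variants = []
    for _ in range(n):
        start = rng.randint(10, 99)
        given_away = rng.randint(1, start)
        bought = rng.randint(10, 50)  # keeps every true answer at 10 or more
        variants.append((start, given_away, bought))
    return variants


def consistency(model: Callable[[str], int], variants: List[Params]) -> float:
    """Fraction of perturbed questions the model answers correctly."""
    correct = sum(model(make_question(*v)) == solve(*v) for v in variants)
    return correct / len(variants)


def memorizing_model(question: str) -> int:
    # Stand-in for a model that repeats one memorized answer.
    return 7


def reasoning_model(question: str) -> int:
    # Stand-in for a model that actually carries out the steps.
    start, given_away, bought = map(int, re.findall(r"\d+", question))
    return start - given_away + bought


variants = generate_variants(20)
print("memorizing:", consistency(memorizing_model, variants))
print("reasoning: ", consistency(reasoning_model, variants))
```

A gap between accuracy on the original question and accuracy on its perturbed variants is the kind of inconsistency this style of evaluation is meant to surface.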

Addressing Limitations

Despite its advancements, ReasonAgain faces challenges in symbolically representing problems, especially in spatial and causal reasoning. Overcoming these limitations requires innovative solutions to model complex reasoning tasks accurately. By tackling these challenges, researchers aim to create AI systems capable of understanding and solving intricate problems.

Some of the key limitations and ongoing research areas include:

- Developing more sophisticated symbolic representations for abstract concepts

- Improving the integration of spatial reasoning capabilities

- Enhancing the system’s ability to handle ambiguity and uncertainty in reasoning tasks

Addressing these limitations is crucial for expanding the applicability of ReasonAgain across a broader range of AI reasoning challenges.

Real-World Applications

The practical implications of ReasonAgain are significant, emphasizing the need for AI systems equipped with the right tools for reasoning tasks. With these tools, even small, local LLMs can achieve high reasoning accuracy, showcasing the potential for widespread application. This capability is crucial for developing AI systems that can efficiently and effectively perform complex reasoning tasks.

Potential real-world applications include:

- Medical diagnosis and treatment planning

- Financial risk assessment and decision-making

- Environmental modeling and climate change prediction

- Legal analysis and case law interpretation

By enhancing AI reasoning capabilities, ReasonAgain opens up new possibilities for AI deployment in critical and complex domains, potentially transforming how we approach problem-solving across various industries.

Future Prospects

Looking ahead, exploring spatial reasoning for robotic applications presents a promising research direction. By focusing on the right tools, researchers can overcome current AI reasoning limitations, paving the way for more advanced systems. ReasonAgain highlights the importance of symbolic reasoning in AI, offering a path for small-scale models to perform complex tasks with precision.

Future research directions may include:

- Integrating ReasonAgain with other AI technologies like reinforcement learning

- Developing domain-specific symbolic representations for specialized reasoning tasks

- Exploring the potential of ReasonAgain in multi-agent AI systems

As the field of AI continues to evolve, methodologies like ReasonAgain will play a crucial role in shaping the next generation of intelligent systems, pushing the boundaries of what’s possible in AI reasoning and decision-making.

ReasonAgain represents a significant advancement in enhancing AI reasoning capabilities. By using symbolic reasoning and evaluation techniques, researchers can develop AI systems that understand and solve complex problems, moving beyond memorization toward genuine understanding. This work has the potential to transform various sectors, from healthcare to finance, by enabling more sophisticated and reliable AI-driven decision-making. Read the official research paper on the arXiv website.

Media Credit: Discover AI
