This is because Apple decided to support a version of Rich Communication Services (RCS) that doesn’t include end-to-end encryption. One app you might want to use instead is WhatsApp, which encrypts messages end-to-end between iOS and Android users in both directions. Texts sent from one iOS user to another iOS user, or from one Android user to another Android user, are already encrypted end-to-end.

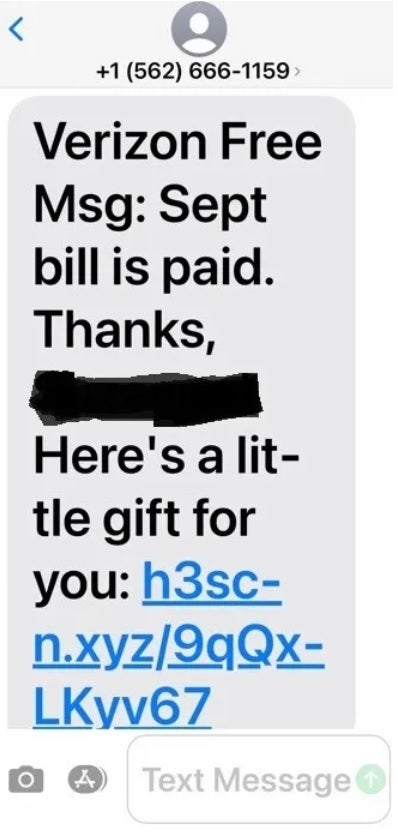

Bogus text sent to Verizon subscribers seeking personal information. | Image credit: Tom’s Hardware

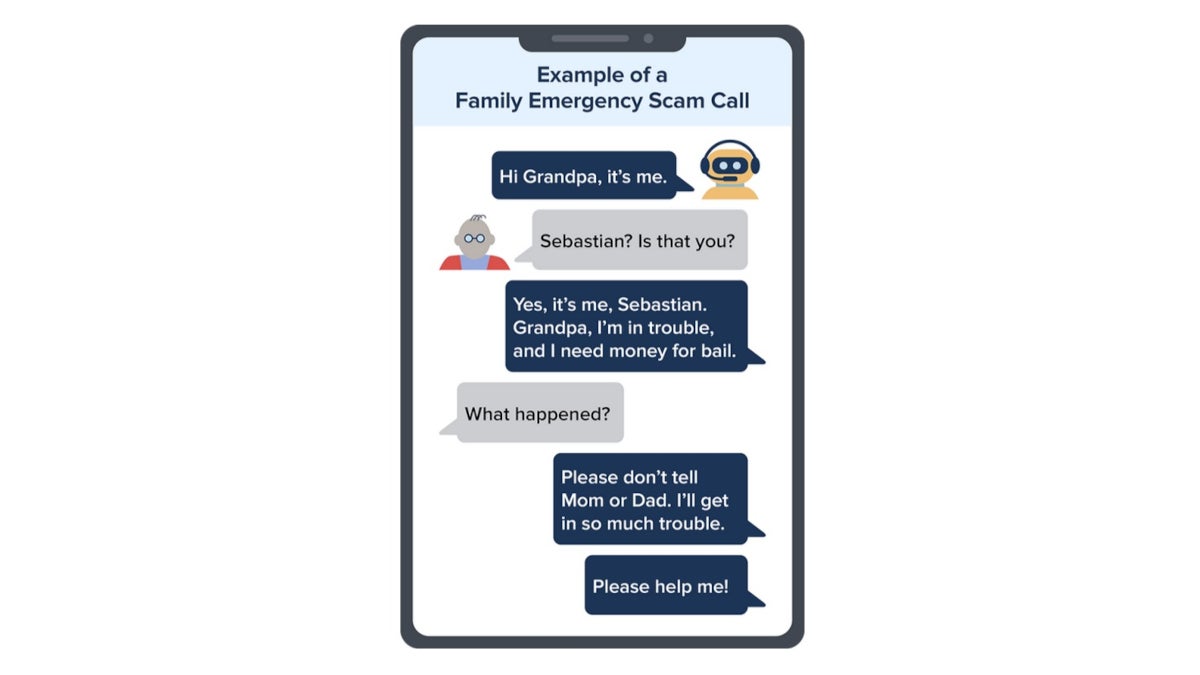

The G-men warn that generative AI can be used in scams to:

- Produce photos to share with victims to help convince them that they are speaking with a real person.

- Create images of celebrities and social media personalities to show them promoting fraudulent activities.

- Create an audio clip of a loved one in a crisis situation requesting financial aid.

- Produce video clips of company executives and law enforcement.

- Create a video clip to “prove” that an online contact is a real person.

Come up with a secret word that only you and your family know. If you receive a call claiming that a loved one is in danger, asking for that word can show whether the caller is genuine or an AI-generated fake.

Never, never, never share sensitive information with people you meet online or over the phone.

While you wouldn’t want the FBI cracking open your phone, you can heed its advice and keep your personal data safe. That in turn will help keep attackers from accessing your financial accounts.